Ashley W.D. Hill

PhD Researcher Engineer specialized in machine learning applied to robotics

CEA

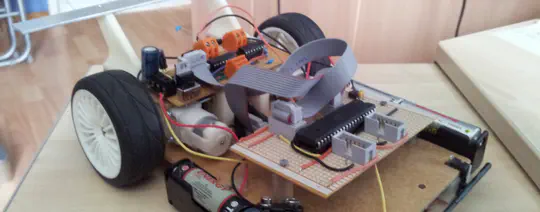

I’m Ashley Hill, a Brit who has spent his life in France. I am currently a Researcher Engineer specialized in machine learning applied to robotics. You can usually find me playing around with Machine Learning, Robotics, Electronics, Astronomy, and Programming.

Here is my thesis for the curious.

Projects

Featured Publications

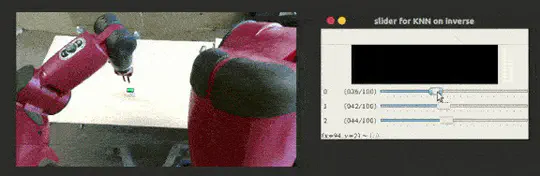

This paper addresses the problem of adapting a control system to unseen conditions, specifically the problem of trajectory tracking in off-road conditions. Three different approaches are considered and compared. The first approach is a classical reinforcement learning method to define the steering control of the system. The second strategy uses an end-to-end reinforcement learning method, allowing for the training of a policy for the steering of the robot. The third strategy uses a hybrid gain tuning method, allowing for the adaptation of the settling distance with respect to the robot’s capabilities according to the perception, in order to optimize the robot’s behavior with respect to an objective function. The three methods are described and compared to the results obtained using constant parameters in order to identify their respective strengths and weaknesses. They have been implemented and tested in real conditions on an off-road mobile robot with variable terrain and trajectories. The hybrid method allows for an overall reduction of 53.2% when compared with a predictive control law. A thorough analysis of the methods is then performed, and further insights are obtained in the context of gain tuning for steering controllers in dynamic environments. The performance and transferability of these methods are demonstrated, as well as their robustness to changes in the terrain properties. As a result, tracking errors are reduced while preserving the stability and the explainability of the control architecture.

Stable-Baselines3 provides open-source implementations of deep reinforcement learning (RL) algorithms in Python. The implementations have been benchmarked against reference codebases, and automated unit tests cover 95% of the code. The algorithms follow a consistent interface and are accompanied by extensive documentation, making it simple to train and compare different RL algorithms. Our documentation, examples, and source-code are available at https://github.com/DLR-RM/stable-baselines3.

Recent Publications

Experience

Skills

What I do in my free time

Bouldering

Exploring

10 years

Most of my projects

Running a local Portainer & hobby coding

Popular Topics

Contact

- contact [at] hill-a [dot] me

- Saclay, Essonne 91400